Local LLMs vs Cloud-Based AI: The Complete Business Guide to Choosing Your AI Automation Strategy

The artificial intelligence revolution is transforming how businesses operate, but choosing the right AI deployment strategy can feel overwhelming. Should you run large language models (LLMs) locally on your own servers using tools like LM Studio or Ollama, or rely on cloud-based solutions like ChatGPT, Claude, or Gemini?

This decision impacts everything from your data security to your monthly costs, and the wrong choice could expose your business to unnecessary risks or limit your growth potential. Let’s break down everything you need to know to make an informed decision.

What Are Local vs Cloud-Based LLMs?

Local LLMs are AI models that run entirely on your own systems, whether that’s your office servers, desktop computers, or private cloud infrastructure. Popular tools like LM Studio, Ollama, and GPT4All make it possible to download and run powerful AI models without sending any data to external companies.

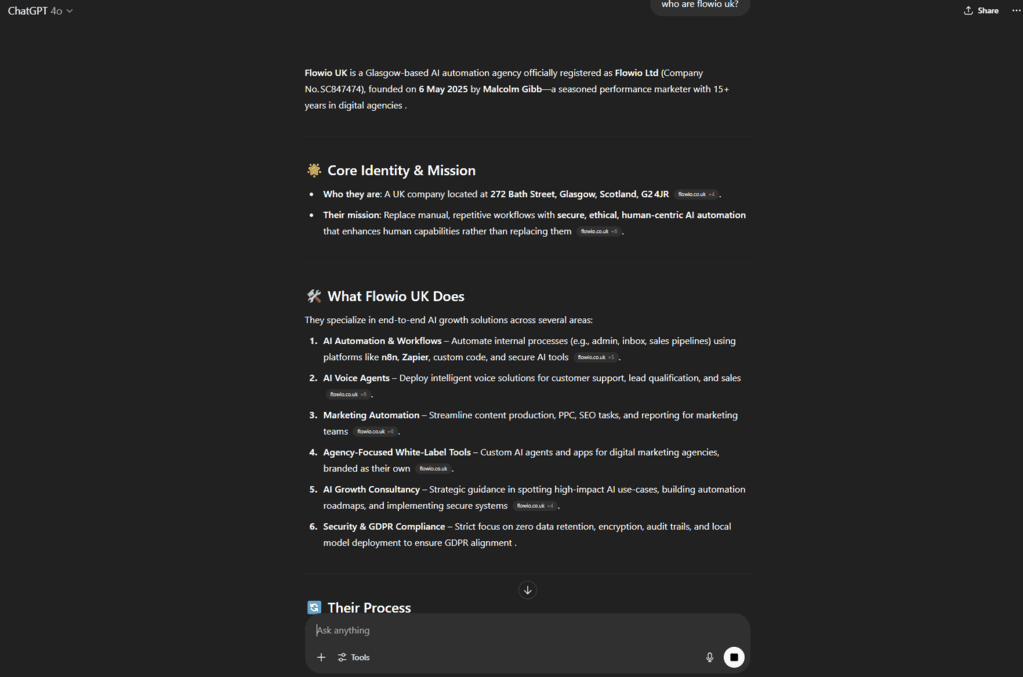

Cloud-based LLMs are the AI services you are probably already familiar with, hosted by major tech companies like OpenAI (ChatGPT), Anthropic (Claude), Google (Gemini), and Microsoft (Copilot). You access these through APIs or web interfaces, and your data is processed on their servers.

The Security and Privacy Divide

For many businesses, data security and privacy are the most critical factors in this decision. In the UK, businesses must maintain proper data retention and GDPR policies alongside a range of other security protocols, so security needs to be front of mind when considering any AI automation in your business.

Local LLMs: Complete Data Control

When you run LLMs locally, your data never leaves your premises. Every document, conversation, and piece of sensitive information stays within your controlled environment. This approach offers:

- Zero data transmission to external servers

- Complete audit trails of how your data is used

- No third-party access to your business information

- Compliance-friendly setup for regulated industries

For example, a law firm processing confidential client documents can use local LLMs to summarise contracts and research cases without any risk of privileged information being exposed to external parties.

A mortgage broker could use a local LLM to process customer financial applications, for example to summarise lengthy applications or identify their highest-value clients, without any risk of personal information being exposed outside the business.
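As a rough illustration of what "local" means in practice, the sketch below sends a document to an Ollama server running on the same machine. It assumes Ollama is installed and listening on its default port (11434) and that a model tagged `llama3.1` has already been pulled. The function names and the prompt wording are hypothetical, chosen only for this example.

```python
import json
import urllib.request

# Ollama's default local endpoint; nothing here points at an external host.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_summary_request(document_text: str, model: str = "llama3.1") -> urllib.request.Request:
    """Build (but do not send) a summarisation request for a local Ollama server."""
    payload = {
        "model": model,  # assumed to be pulled already, e.g. `ollama pull llama3.1`
        "prompt": "Summarise the following document in five bullet points:\n\n" + document_text,
        "stream": False,  # ask for one JSON reply instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarise_locally(document_text: str, model: str = "llama3.1") -> str:
    """Send the request to localhost and return the generated summary text."""
    request = build_summary_request(document_text, model)
    with urllib.request.urlopen(request, timeout=120) as reply:
        return json.loads(reply.read())["response"]
```

Calling `summarise_locally(contract_text)` only works while the local server is running. Because the request targets `localhost`, the document is processed on the same machine and is not sent to an external provider.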

Cloud-Based LLMs: Varying Privacy Policies

Cloud providers have different approaches to data handling:

OpenAI (ChatGPT): Business customers (Team & Enterprise plans) can opt out of data training, and API calls aren’t used to improve models. However, data still passes through OpenAI’s servers and may be retained for abuse monitoring (typically 30 days). Without an enhanced plan, ChatGPT may store data indefinitely, even after deletion, meaning there is no Zero Data Retention (ZDR) in place.

Anthropic (Claude): Similar to OpenAI, enterprise customers can prevent their data from being used for training. Anthropic retains conversation data for safety monitoring but doesn’t use it for model improvement.

Google (Gemini): Google’s enterprise offerings include data residency options and commitments not to train on customer data, though specific retention policies vary by service tier.

The key concern isn’t necessarily that these companies will misuse your data, but that it exists outside your direct control and could potentially be subject to government requests, data breaches, or policy changes.

With most of these cloud-based LLMs, you must take out a Team or Enterprise plan to secure a data policy or zero data retention with the platform. You also need to consider where your data is routed when using these tools, as most providers will send your data to servers in the US. Some let you switch to EU-based infrastructure, but this is a question to ask your AI consultancy from the start to avoid policy issues further down the line.

Deployment and Maintenance: Effort vs Convenience

Local LLMs: Higher Initial Investment, Greater Control

Setting up local LLMs requires:

- Hardware investment: Decent performance needs 16-32GB of RAM at a minimum, and larger models require powerful GPUs

- Technical expertise: Someone needs to install, configure, and maintain the systems

- Ongoing updates: Regular model updates and security patches

- Scaling challenges: Adding capacity means buying more hardware

A medium-sized accounting firm might spend £5,000-£30,000 on hardware and dedicate 10-20 hours monthly to maintenance, but they gain complete control over their AI capabilities.
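To see how an upfront hardware spend trades off against a recurring cloud bill, a rough break-even calculation can help. The sketch below is illustrative only: the function name, the £40 hourly rate for maintenance time and the £900 monthly cloud spend are assumptions made for the example, not quoted prices.

```python
from typing import Optional


def months_to_break_even(hardware_cost: float,
                         maintenance_hours_per_month: float,
                         hourly_rate: float,
                         cloud_cost_per_month: float) -> Optional[float]:
    """Months until a one-off hardware spend is repaid by avoided cloud fees.

    Returns None when the monthly cost of maintaining local hardware meets or
    exceeds the cloud bill, in which case the hardware never pays for itself.
    """
    local_running_cost = maintenance_hours_per_month * hourly_rate
    monthly_saving = cloud_cost_per_month - local_running_cost
    if monthly_saving <= 0:
        return None
    return hardware_cost / monthly_saving


# £5,000 of hardware, 10 hours of upkeep at an assumed £40/hour,
# compared with an assumed £900/month cloud bill.
print(months_to_break_even(5_000, 10, 40, 900))  # 10.0
# With a £200/month cloud bill the upkeep alone costs more than cloud.
print(months_to_break_even(5_000, 10, 40, 200))  # None
```

The second call shows why light cloud users rarely recover a hardware investment on cost alone: the maintenance time already exceeds what they would pay a provider.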

It is possible to host and train a local LLM on a less powerful machine, but performance and speed will be severely impacted.
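A quick way to judge whether a machine can hold a given model is to estimate the memory taken by its weights: parameter count multiplied by bytes per weight. The sketch below covers running a model only, not training it. The function name is hypothetical and the 1.2 overhead factor (headroom for context and runtime buffers) is an assumed rule of thumb, so treat the results as ballpark figures.

```python
def estimate_model_memory_gb(parameters_billions: float,
                             bits_per_weight: int = 4,
                             overhead_factor: float = 1.2) -> float:
    """Approximate memory (GB) needed to load a model for inference.

    bits_per_weight: 16 for half precision, 8 or 4 for quantised models.
    overhead_factor: assumed headroom on top of the raw weights.
    """
    weight_bytes = parameters_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead_factor / 1e9


# An 8-billion-parameter model quantised to 4 bits per weight
print(f"{estimate_model_memory_gb(8):.1f} GB")       # 4.8 GB
# A 70-billion-parameter model at 16 bits per weight
print(f"{estimate_model_memory_gb(70, 16):.1f} GB")  # 168.0 GB
```

On these assumptions a small quantised model fits comfortably within 16GB of memory, while a large model at full precision is beyond a typical desktop.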

Cloud-Based LLMs: Instant Access, Ongoing Costs

Cloud-based LLM solutions offer:

- Immediate deployment: Sign up and start using AI within minutes

- No hardware costs: Pay only for usage

- Automatic updates: Access to the latest models as they are released

- Elastic scaling: Handle large or spiky workloads without managing infrastructure, subject to provider rate limits

However, costs can add up quickly. A business processing 1 million tokens monthly might pay $10-$100 per month, depending on the type of API request (e.g. text versus image or video generation) and the size of the context (how much text you send to the LLM and how much it returns). Costs scale directly with usage.
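Because API pricing is usually quoted per million tokens, with input and output charged at different rates, a monthly bill can be estimated with simple arithmetic. The sketch below uses placeholder prices ($10 per million input tokens, $30 per million output tokens) that are assumptions for illustration only. Check your provider’s current price list before budgeting.

```python
def monthly_api_cost(input_tokens: int,
                     output_tokens: int,
                     input_price_per_million: float,
                     output_price_per_million: float) -> float:
    """Estimate a monthly API bill from token volumes and per-million prices."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_million
    output_cost = (output_tokens / 1_000_000) * output_price_per_million
    return input_cost + output_cost


# 1 million tokens in total: 800k sent to the model, 200k generated by it,
# at the placeholder prices described above.
cost = monthly_api_cost(800_000, 200_000, 10.0, 30.0)
print(f"${cost:.2f}")  # $14.00
```

Doubling either token volume doubles that part of the bill, which is why long prompts and large context windows matter as much as the number of requests.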

Performance and Capability Comparison

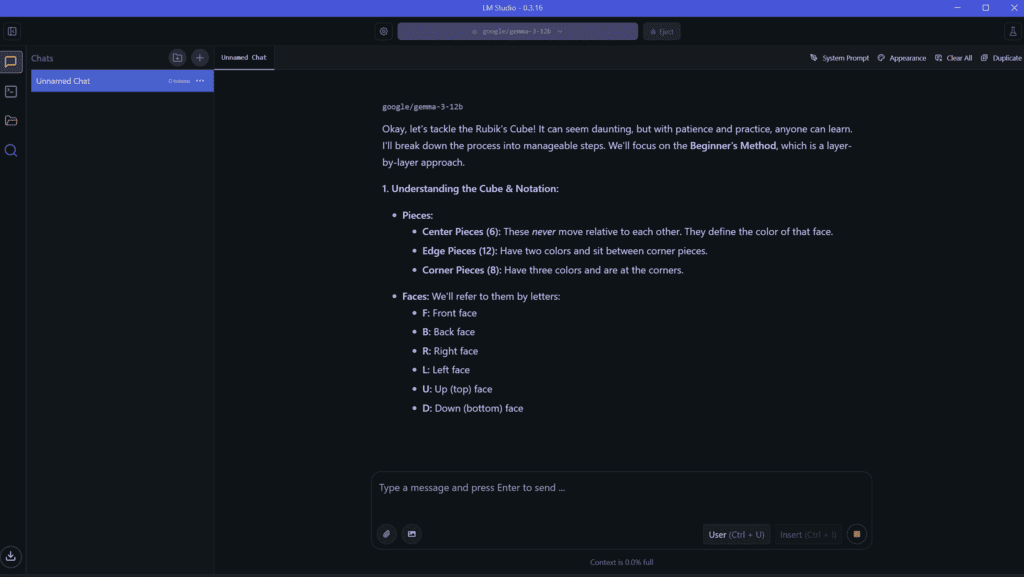

Local LLMs: Good Enough for Most Tasks

Modern local models like Llama 3.1, Qwen 2.5, and Phi-3 can handle:

- Document summarisation

- Customer service automation

- Content generation

- Basic analysis tasks

Performance depends on your hardware, but mid-range setups can run models comparable to GPT-3.5 quality.

New OpenAI Models [Update 5th August 2025]

As of 5th August 2025, OpenAI has released two new open-weight models, its first since GPT-2. These models, GPT-OSS 20B and GPT-OSS 120B, are comparable in reasoning, coding and agent-based tasks to o3-mini and o4-mini respectively.

This means that these models can be downloaded or run via platforms such as Hugging Face, LM Studio, vLLM, AWS or Azure and fine-tuned to your business needs. You can read more about these models on our blog post “OpenAI Releases GPT-OSS 20B and 120B: A New Era for Open-Weight Reasoning Models”.

Cloud-Based LLMs: Cutting-Edge Performance

Cloud providers offer:

- Latest model architectures: Access to GPT-4.5, Claude Opus 4, and Gemini 2.5 Pro

- Specialised capabilities: Advanced reasoning, code generation and multimodal processing. Each model has its own characteristics and strengths, e.g. o3 for coding and reasoning

- Reliability: Enterprise-grade uptime and support

For complex tasks requiring the highest AI performance, cloud solutions currently maintain an edge.

AI Automation Strategies by Business Type

Small Businesses: Cloud-First, Local Later

Most small businesses should start with cloud-based LLMs due to:

- Lower upfront costs (pay for LLM plan and API token usage)

- Faster implementation

- No technical expertise required

- Easy to scale up or down

A small marketing agency might use Claude for content creation and ChatGPT for customer support within their AI automations, spending £50-£200 monthly while avoiding any infrastructure headaches.

Compliance-Heavy Industries: Local LLMs as Competitive Advantage

Healthcare, legal, and financial services often find local LLMs essential:

Healthcare Example: A medical practice can use local LLMs to analyse patient records and generate treatment summaries without risking patient data exposure or breaching regulations such as HIPAA in the US, or GDPR and NHS-specific rules in the UK.

Legal Example: Law firms can leverage local AI for document review, contract analysis, and legal research while maintaining solicitor-client privilege.

Financial Example: Investment firms can analyse market data and generate reports using local models, ensuring proprietary trading strategies remain confidential.

Security-Conscious Teams: Hybrid Approaches

Many security-focused organisations adopt a hybrid strategy:

- Local LLMs for sensitive data processing

- Cloud LLMs for general tasks and external communications

- Air-gapped systems for the most critical applications

A cybersecurity firm might use local models for threat analysis and cloud AI for marketing content and customer communications, while reserving an air-gapped system for critical algorithms or testing systems.
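A hybrid setup needs some rule for deciding where each request goes. The sketch below shows one minimal approach: a router that checks text against a few patterns and picks a destination. The patterns, the `Route` names and the `choose_route` function are all hypothetical. A production system would need a far more thorough sensitivity check, but the shape of the decision is the same.

```python
import re
from enum import Enum


class Route(Enum):
    AIR_GAPPED = "air_gapped"
    LOCAL = "local"
    CLOUD = "cloud"


# Hypothetical markers of sensitive content, for illustration only.
SENSITIVE_PATTERNS = [
    re.compile(r"\b[A-Z]{2}\d{6}[A-D]\b"),  # shaped like a UK National Insurance number
    re.compile(r"\b\d{2}-\d{2}-\d{2}\b"),   # shaped like a UK bank sort code
    re.compile(r"\b(confidential|privileged|patient)\b", re.IGNORECASE),
]


def choose_route(text: str, is_critical_system: bool = False) -> Route:
    """Pick a destination: air-gapped for critical work, local for
    sensitive text, cloud for everything else."""
    if is_critical_system:
        return Route.AIR_GAPPED
    if any(pattern.search(text) for pattern in SENSITIVE_PATTERNS):
        return Route.LOCAL
    return Route.CLOUD


print(choose_route("Draft a LinkedIn post about our new office"))  # Route.CLOUD
print(choose_route("Summarise this confidential contract"))        # Route.LOCAL
```

Simple pattern checks will sometimes flag harmless text, such as a date that looks like a sort code. That errs towards keeping data local, which is the safer direction for a misclassification.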

Making the Right Choice for Your Business

Choose Local LLMs If:

- You handle sensitive or regulated data

- Privacy and compliance are non-negotiable

- You have technical expertise in-house

- Long-term cost control is important

- You need guaranteed availability

Choose Cloud LLMs If:

- You need immediate AI capabilities

- Technical maintenance isn’t feasible

- You require cutting-edge performance

- Usage is unpredictable or seasonal

- Budget flexibility is more important than total cost

Consider a Hybrid Approach If:

- You have mixed use cases

- Some data is sensitive, some isn’t

- You want to start simple and evolve

- You need both security and performance
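The checklists above can be turned into a simple first-pass recommendation. The sketch below is only a rough aid. The `BusinessProfile` fields cover a handful of the criteria, and the rule that any mix of local and cloud signals points to hybrid is an assumption of this example rather than a fixed methodology.

```python
from dataclasses import dataclass


@dataclass
class BusinessProfile:
    handles_regulated_data: bool
    has_in_house_expertise: bool
    needs_cutting_edge_performance: bool
    usage_is_unpredictable: bool


def recommend_deployment(profile: BusinessProfile) -> str:
    """Return 'local', 'cloud' or 'hybrid' from a few yes/no answers."""
    local_signals = profile.handles_regulated_data + profile.has_in_house_expertise
    cloud_signals = profile.needs_cutting_edge_performance + profile.usage_is_unpredictable
    if local_signals and cloud_signals:
        return "hybrid"
    if local_signals:
        return "local"
    return "cloud"  # default starting point when nothing points to local


print(recommend_deployment(BusinessProfile(True, True, False, False)))   # local
print(recommend_deployment(BusinessProfile(True, False, True, False)))   # hybrid
print(recommend_deployment(BusinessProfile(False, False, False, True)))  # cloud
```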

If you are unsure where you fit, or have questions about which stack is right for your business, contact us for a free consultation.

Looking Forward: The AI Deployment Landscape

The gap between local and cloud LLM capabilities is narrowing rapidly. Local models are becoming more powerful while requiring less hardware, making them accessible to smaller businesses. Meanwhile, cloud providers are improving their privacy offerings and reducing costs.

Emerging trends:

- Smaller, more efficient models making local deployment easier

- Improved privacy controls from cloud providers

- Edge AI solutions combining local and cloud benefits

- Regulatory requirements potentially mandating local processing

Your Next Steps

- Assess your data sensitivity: What information does your AI need to process?

- Evaluate your technical capabilities: Can you manage local infrastructure?

- Calculate your usage patterns: How much AI processing do you expect?

- Consider compliance requirements: What regulations apply to your industry?

- Start small and scale: Begin with one approach and expand based on results

Conclusion: Strategy Over Technology

The choice between local and cloud-based LLMs isn’t just about technology; it’s about aligning your AI strategy with your business goals, risk tolerance, and growth plans.

For most businesses, starting with cloud-based solutions provides the fastest path to AI automation benefits. As your AI usage grows and your requirements become clearer, you can then evaluate whether local deployment makes sense for some or all of your use cases.

The key is to remain flexible and informed. The AI landscape is evolving rapidly, and today’s decision doesn’t have to be permanent. Focus on getting started with AI automation in a way that fits your current needs while keeping future options open.

Remember: the best AI deployment strategy is the one that actually gets implemented and delivers value to your business. Don’t let perfect be the enemy of good when it comes to embracing AI automation in your organisation.

Ready to implement AI automation in your business? Consider starting with a small pilot project using cloud-based LLMs to gain experience, then evaluate whether local deployment makes sense for your specific use cases and compliance requirements.

About the author

Malcolm Gibb - Founder & CEO // flowio

Hi 👋, I'm Malcolm - Founder of flowio. I founded flowio after 15 years of leading performance marketing agencies. flowio exists to help businesses combine AI, automation and smart development solutions to solve critical business challenges. The content that you read here in the flowio blog is written by myself and based on experiences, insights and topical content from working with our clients and building automation solutions.
